Monitoring Kubernetes Clusters

When Wyn Enterprise is deployed to a Kubernetes (K8s) cluster, most issues can be debugged using logs. For complex scenarios involving the health of Kubernetes resources such as pods, nodes, services, and networks, where troubleshooting with logs alone is difficult, you can use the APIs provided by K8s. The following sections describe how to use the K8s APIs to obtain and monitor health information.

Create a Service Account and Role Binding

Set the following option in the 'values.yaml' file of the Helm chart to enable or disable the K8s cluster permissions used by this feature.

investigationAccount:

enabled: true

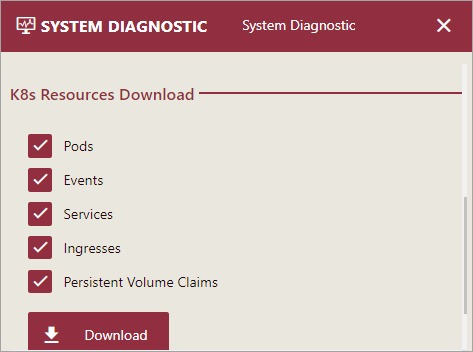

name: wyn-investigatorBy default, this function is disabled. If the customer enables this function, an environment variable named K8sInvestigation is passed to the Wyn server process. The Wyn server then provides the UI under the System Diagnostic option in the admin portal, to download the zip file and retrieve the K8s information.

The following K8s resources are retrieved:

- Pods
- Services
- Events
- Ingresses
- Persistent Volume Claims

The downloaded zip file contains several JSON files, and each file contains the original description text of the K8s resources.

To keep modules independent and reduce package dependency conflicts, the logic for retrieving K8s resources is implemented in the service runner. When a user requests the K8s information in the admin portal, the Wyn server sends a request to the service runner.

Wyn currently offers two methods for deploying to K8s: custom scripts and Helm charts.

When Wyn is deployed to K8s using custom scripts

In this case, enabling the collection of K8s information requires more steps than it does with Helm charts, as outlined below.

Use a Service Account to Run Wyn Services

1. Create the service account, role, and role binding. The role grants the permissions required to read the various resources, and the role binding associates the role with the service account.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: "wyn-investigator"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: wyn-resource-reader
rules:
  - apiGroups: [""]
    resources: ["pods", "pods/log", "services", "configmaps", "events", "persistentvolumeclaims"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["apps"]
    resources: ["deployments", "statefulsets", "replicasets"]
    verbs: ["get", "list", "create"]
  - apiGroups: ["networking.k8s.io"]
    resources: ["ingresses", "networkpolicies"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: wyn-resource-reader-binding
subjects:
  - kind: ServiceAccount
    name: "wyn-investigator"
roleRef:
  kind: Role
  name: wyn-resource-reader
  apiGroup: rbac.authorization.k8s.io
```

Here, wyn-investigator is the name of the service account, which you can change as required. Create these resources in the same namespace as the Wyn services, because a pod can only use a service account from its own namespace.

2. Assign this service account to each Wyn service by setting the spec.template.spec.serviceAccountName field to the name of the service account. The following example uses 'server.yaml'.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wyn-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wyn-server
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wyn-server
    spec:
      serviceAccountName: wyn-investigator
      containers:
        - name: wyn-server
          image: wynenterprise/wyn-enterprise-k8s:{WYN_VERSION}
```

3. Add the environment variable K8sInvestigation with the value "true" to the Server service ('server.yaml') under spec.template.spec.containers[].env, as shown in the following example.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wyn-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wyn-server
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wyn-server
    spec:
      serviceAccountName: wyn-investigator
      containers:
        - name: wyn-server
          image: wynenterprise/wyn-enterprise-k8s:{WYN_VERSION}
          env:
            - name: K8sInvestigation
              value: "true" # env values must be strings, so true is quoted
```

Invoke K8s APIs to obtain the metrics data

Whether you deploy with Helm charts or custom scripts, follow these steps to obtain the metrics data.

1. Create a file named 'metrics-server.yaml' with the following content, and then apply it by running the command kubectl apply -f metrics-server.yaml.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  replicas: 2
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchLabels:
                k8s-app: metrics-server
            namespaces:
            - kube-system
            topologyKey: kubernetes.io/hostname
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=10250
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls=true
        image: registry.k8s.io/metrics-server/metrics-server:v0.7.1
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 10250
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            drop:
            - ALL
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
          seccompProfile:
            type: RuntimeDefault
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  minAvailable: 1
  selector:
    matchLabels:
      k8s-app: metrics-server
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
```

2. If you deploy with Helm charts, set investigationAccount.enabled to true in 'values.yaml'. If you deploy with custom scripts, add the environment variable K8sInvestigation with the value "true" to 'server.yaml'.

3. Call the API {wyn server host}:{wyn server port}/api/v2/admin/k8s/metrics. The response is an array of PodMetrics objects ([]PodMetrics), whose schema is as follows.

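```go
package v1beta1

import (
	corev1 "k8s.io/api/core/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
)

// PodMetrics holds the resource usage of all containers in one pod.
type PodMetrics struct {
	metav1.TypeMeta   `json:",inline"`
	metav1.ObjectMeta `json:"metadata,omitempty"`

	// The following fields define the time interval from which metrics were
	// collected, in the format [Timestamp-Window, Timestamp].
	Timestamp metav1.Time     `json:"timestamp"`
	Window    metav1.Duration `json:"window"`

	// Metrics for all containers are collected within the same time window.
	Containers []ContainerMetrics `json:"containers"`
}

// ContainerMetrics holds the resource usage of one container.
type ContainerMetrics struct {
	// Container name corresponding to the one from pod.spec.containers.
	Name string `json:"name"`
	// The memory usage is the memory working set.
	Usage corev1.ResourceList `json:"usage"`
}
```

Understanding CPU Usage Metrics in Kubernetes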

When reviewing the metrics provided by the Metrics Server in Kubernetes, particularly CPU usage, it is important to understand the units and the context in which these values are presented.

Units of Measurement

CPU usage metrics reported by the Metrics Server are often measured in nanocores (n). A nanocore is a billionth of a single CPU core's capacity. This unit is used because modern systems can schedule tasks at very fine-grained intervals, and it allows for precise measurement of CPU resource consumption.

Interpreting CPU Usage

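The CPU usage value indicates the average CPU consumption of a container or pod over the time window reported in the window field. Kubernetes derives it by taking a rate over a cumulative CPU counter, so the value is already averaged over that window. For instance, if a metric reports a CPU usage of Xn, the container used, on average, X nanocores (X billionths of a core) during the measurement period, which is equivalent to X nanoseconds of CPU time per second.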

To translate this into more understandable terms:

1 core = 1000 milli-cores = 1,000,000 micro-cores = 1,000,000,000 nanocores
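The conversion above can be sketched in Go as a small parser for CPU quantity strings such as "63886268n". This parser is a simplified illustration that only understands the n, u, and m suffixes and plain numbers; for the full quantity syntax, the resource.Quantity type in k8s.io/apimachinery/pkg/api/resource is the appropriate tool.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// cpuSuffixDivisors maps the suffixes used for CPU quantities to the number of
// units per core: 1 core = 1000m = 1,000,000u = 1,000,000,000n.
var cpuSuffixDivisors = map[string]float64{
	"n": 1e9, // nanocores
	"u": 1e6, // microcores
	"m": 1e3, // millicores
	"":  1,   // whole cores
}

// parseCPUCores converts a CPU quantity such as "63886268n", "250m" or "2"
// into cores. It only understands the suffixes in cpuSuffixDivisors.
func parseCPUCores(q string) (float64, error) {
	number := strings.TrimSpace(q)
	suffix := ""
	if n := len(number); n > 0 {
		if last := number[n-1:]; last == "n" || last == "u" || last == "m" {
			suffix, number = last, number[:n-1]
		}
	}
	value, err := strconv.ParseFloat(number, 64)
	if err != nil {
		return 0, fmt.Errorf("unsupported CPU quantity %q: %w", q, err)
	}
	return value / cpuSuffixDivisors[suffix], nil
}

func main() {
	for _, q := range []string{"63886268n", "250m", "1500000u", "2"} {
		cores, err := parseCPUCores(q)
		if err != nil {
			fmt.Println(err)
			continue
		}
		fmt.Printf("%-10s = %.9f cores (%.2f%% of one core)\n", q, cores, cores*100)
	}
}
```

Dividing by an exact power of ten, rather than multiplying by a fractional factor such as 1e-9, keeps the floating-point result as close as possible to the decimal value.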

So, to convert a CPU usage of Y nanocores into a percentage of a single core's capacity, divide Y by 1,000,000,000 to get cores and multiply the result by 100. Because the value is already an average over the measurement window, you do not need to divide or multiply by the window length; the window only matters if you want the total CPU time consumed during that period (cores multiplied by the window in seconds).

Example Calculation

Let's say a metric reports a CPU usage of 63886268n over a window of 14.784 seconds. To calculate the average CPU usage as a percentage of a single core:

1. Convert nanocores to cores: 63886268n / 1,000,000,000 = 0.063886268 cores.
2. Convert this to a percentage: 0.063886268 × 100% ≈ 6.39%.

Therefore, the container averaged approximately 6.39% of a single core's capacity (about 64 millicores) during the measurement window. The window length only matters for the total CPU time consumed: 0.063886268 cores × 14.784 seconds ≈ 0.944 seconds of CPU time.

Remember that these metrics reflect the CPU the container actually used, which may differ from the CPU request or limit set in the pod's configuration. The Metrics Server provides these near-real-time insights to help you monitor and manage resources within your Kubernetes cluster.